The Hidden Cost of ChatGPT Health: Why OpenAI’s New Play is a Trojan Horse for Pharma

OpenAI's ChatGPT Health launch isn't about patient care; it's about data acquisition. Unpacking the real winners in this new era of AI diagnostics.

Key Takeaways

- ChatGPT Health's core value is not patient service, but massive, real-time data aggregation for private entities.

- The launch erodes traditional medical gatekeeping, risking the proliferation of statistically probable but contextually flawed advice.

- A two-tiered healthcare system based on human vs. AI reliance is the most likely near-term outcome.

- Regulatory bodies are lagging far behind the speed of this technological integration.

The headlines scream progress: ChatGPT Health has arrived, promising to revolutionize patient interaction and diagnostic support. But before we rush to embrace this shiny new digital doctor, we must ask the uncomfortable question: Who is truly benefiting from this massive data ingestion? This isn't a simple software update; it is a fundamental restructuring of medical information power, and the conversation around AI in healthcare is dangerously superficial.

The Unspoken Truth: Data is the New Stethoscope

Every interaction a patient has with ChatGPT Health (every symptom described, every history shared, every follow-up question asked) is a data point. Unlike records held in encrypted hospital systems bound by HIPAA (though even those have vulnerabilities), this data is training material for a large language model, aggregated and refined by a private entity whose primary shareholders are not healthcare providers but tech investors. The immediate winner here is not the patient seeking quick answers, but the entity controlling the model. It is building the most comprehensive, real-time, anonymized (or pseudo-anonymized) dataset of human illness ever assembled.

The real agenda lurking beneath the surface of this healthcare AI launch is predictive modeling for pharmaceutical intervention and insurance risk assessment. Imagine a drug company knowing, six months before traditional reporting, that an LLM is seeing a surge in specific symptom clusters in a certain demographic. That is market intelligence worth billions. The promise of better triage is the sugar coating on a massive data grab.

Deep Analysis: The Erosion of Medical Gatekeeping

For decades, the medical establishment, for better or worse, acted as the gatekeeper of health information. ChatGPT Health bypasses this entirely. While democratization of information sounds noble, the quality control evaporates. We are trading expert, contextualized advice for statistically probable responses generated by algorithms trained on broad swaths of the public web, including its swamps of medical misinformation. This is where the risk of trusting AI diagnostics becomes existential. A subtle misinterpretation by the model, fed back into the system as new training data, can rapidly harden into a flawed medical consensus.

Consider the economic shift. If primary care physicians begin relying on these tools for initial assessments, the value proposition of general practice changes overnight. This isn't just about efficiency; it’s about shifting liability and accountability onto a black box system that cannot be cross-examined in the same way a human clinician can. (For context on the regulatory challenges facing this sector, see the FDA's evolving stance on medical software.)

What Happens Next? The Prediction

My bold prediction is this: Within 18 months, we will see two distinct classes of medical care emerge. Class One: The wealthy will pay premiums for human-only, verified medical consultation, viewing AI as a dangerous shortcut. Class Two: Under-insured or underserved populations will become the primary beta testers for ChatGPT Health. Their data will fuel the model's accuracy even as they bear the highest risks of algorithmic error. Furthermore, insurance carriers will begin to favor, subtly or overtly, diagnoses generated by approved LLMs, creating a systemic bias toward algorithmic acceptance over human judgment to reduce payout complexity.

The Future of Medical Trust

The debate shouldn't be 'Is ChatGPT Health safe?' but 'Is OpenAI the appropriate steward of our most sensitive personal information?' The answer, judging by its track record in content moderation and data handling, should give every user pause. We must demand radical transparency regarding data usage agreements before we let this technology become the first stop on our path to wellness. The convenience is addictive, but the price may be our medical autonomy.

Frequently Asked Questions

What is the primary concern regarding ChatGPT Health's data usage?

The primary concern is that patient interactions are being used to train proprietary large language models, creating an unparalleled dataset for private corporations. That dataset could shape future pharmaceutical targeting and insurance risk stratification rather than serve patient benefit alone.

How might this technology affect general practitioners?

General practitioners may find their initial diagnostic role devalued as patients increasingly rely on AI tools for first-line assessment. This could lead to systemic pressure to align diagnoses with AI outputs to streamline insurance processes.

Are current HIPAA regulations sufficient for protecting data used by ChatGPT Health?

HIPAA primarily governs covered entities like hospitals and insurers. The legal status of data collected by third-party AI platforms operating outside these strict definitions remains a significant gray area, posing a substantial risk to user privacy.

Related News

The Great Deception: Why 'Humanizing' Tech in Education Is a Trojan Horse for Data Mining

The push to keep education 'human' while integrating radical technology hides a darker truth about data control.

The AI Deepfake Lie: Why Tech Solutions Will Never Stop Sexualized Image Generation

The fight against AI-generated sexualized images is a technological dead end. Discover the hidden winners and why detection is a losing game.

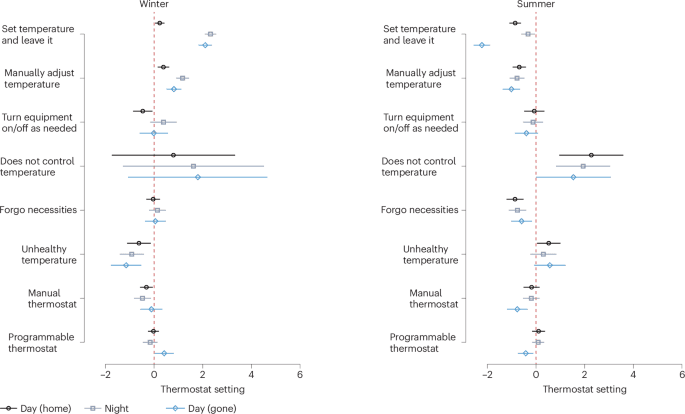

The Climate Lie: Why Your Smart Thermostat Is A Trojan Horse for Energy Control

Forget high-tech fixes. New data reveals US indoor temperature control is a behavioral battlefield, not a technological one. Who profits from this illusion?

DailyWorld Editorial

AI-Assisted, Human-Reviewed

Reviewed By: DailyWorld Editorial