The AI Deepfake Lie: Why Tech Solutions Will Never Stop Sexualized Image Generation

The fight against AI-generated sexualized images is a technological dead end. Discover the hidden winners and why detection is a losing game.

Key Takeaways

- Technological fixes (filters/watermarks) are inherently reactive and will always lag behind generative advancements.

- The primary financial beneficiaries of the 'solution' are the large tech infrastructure providers that sell verification services.

- The true danger is the erosion of baseline reality, making verifiable proof obsolete in public discourse.

- Expect a shift toward high-security, closed digital ecosystems for all serious communication.

The Illusion of the Digital Band-Aid

The current panic surrounding AI-generated sexualized images—often termed deepfakes—is driving a frantic, yet predictable, technological arms race. We hear constant calls for better filters, watermarking, and detection software. This is where the industry fundamentally misunderstands the problem. The real story isn't about fixing the output; it's about who profits from the perception of control.

The prevailing narrative suggests that if engineers simply work harder, they can build an impenetrable digital shield. This is naive. As generative models grow rapidly more sophisticated, any defense mechanism, whether cryptographic provenance or perceptual hashing, is merely a temporary speed bump. Adversarial techniques evolve far faster than regulators or defenders can respond. We are fighting a Hydra, and every severed head sprouts two more sophisticated ones.

The Unspoken Truth: Commoditizing Control

Who truly benefits from this technological cat-and-mouse game? The infrastructure providers. Every major platform, from image generators to social media hosts, will sell 'safety' and 'verification' as premium features. The problem is created by the *free* version of the technology; the fix is always a *paid*, proprietary product. This creates a lucrative moat for established tech giants and centralizes digital authenticity under their control. This isn't about protecting victims; it’s about monetizing trust in the digital sphere. The real losers are independent developers and the very concept of open-source AI development.

Consider the economics of AI image generation. The marginal cost of creating malicious content trends toward zero, while the cost of effective, universal detection keeps climbing, because every new generator demands new detectors. This imbalance guarantees that bad actors will always have the upper hand. Focusing solely on technical fixes for synthetic media ignores the underlying economic incentives driving its creation and dissemination.

Why This Matters: The Erosion of Baseline Reality

This crisis is not just about non-consensual pornography; it’s about the permanent degradation of objective reality. When any verifiable image or video can be instantly dismissed as a deepfake, the concept of 'proof' dissolves. This is far more dangerous than individual harassment. It cripples legal systems, erodes journalism, and allows powerful entities to deny verifiable truths by simply crying 'fake.' We are moving into an era where only proprietary, authenticated communication channels will hold weight, further concentrating power away from the public square. This shift in information authority is the true, lasting impact of widespread synthetic media.

What Happens Next: The Great Decoupling

My prediction is that within three years, we will see a 'Great Decoupling' in online interaction. Users will stop trusting any visual evidence shared through unverified, open channels. Instead, high-stakes communication (legal, financial, political) will retreat into closed, highly regulated, subscription-based ecosystems: think verified corporate intranets or government-mandated digital identities for sensitive sharing. The public internet will become the 'Wild West' of unverified noise, while real, consequential information flows through walled gardens where access, not content, is the primary security measure. This is the ultimate victory for centralized control.

Frequently Asked Questions

Is watermarking effective against AI deepfakes?

Watermarking is a temporary deterrent. Embedded metadata can be stripped simply by re-encoding a file or taking a screenshot, and embedded watermarks can be degraded by cropping, compression, or regenerating the image, making watermarking a fleeting defense rather than a permanent solution.

Who profits most from the deepfake detection industry?

The primary beneficiaries are the large cloud providers and platform owners who sell proprietary verification, authentication, and content moderation services, centralizing control over digital truth.

What is the long-term societal risk beyond image abuse?

The long-term risk is the total collapse of public trust in visual evidence, leading to a state where only closed, authenticated channels are considered reliable for critical information exchange.

Can open-source AI development be safeguarded against misuse?

It is extremely difficult. While responsible AI developers attempt safeguards, the open nature of the technology means that any safety layer can be stripped away or bypassed by actors with sufficient resources and motivation.

Related News

The Great Deception: Why 'Humanizing' Tech in Education Is a Trojan Horse for Data Mining

The push to keep education 'human' while integrating radical technology hides a darker truth about data control.

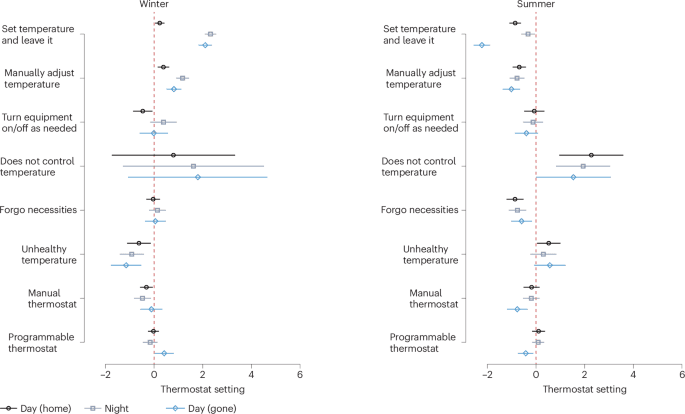

The Climate Lie: Why Your Smart Thermostat Is A Trojan Horse for Energy Control

Forget high-tech fixes. New data reveals US indoor temperature control is a behavioral battlefield, not a technological one. Who profits from this illusion?

The Hidden War: Why AI Image Filters Are a Crumbling Defense Against Deepfake Porn

The battle against malicious AI-generated sexualised images is being lost in plain sight. Technology's proposed fixes are theater.

DailyWorld Editorial

AI-Assisted, Human-Reviewed

Reviewed By

DailyWorld Editorial